I'm Niamh and I've been at Monzo for about a year. I'm the Data Science Lead in our Operations team where I help Monzo serve our customers as efficiently as possible by monitoring our operations, and measuring the impacts of change. One of our key metrics is handling time: how long our customer service staff (known as COps) spend doing a given task. For example, responding to a customer via the app chat. We use this data to make things easier for our customers and our staff, like making sure we have enough COps to support the number of queries we get. To be able to make those decisions, we need to know we can trust our handling time dataset.

Working out if you can trust a dataset is a fairly common task for data scientists. I find it really useful to run through four key checks:

Are the numbers possible?

Are the numbers reasonable?

Do spot checks uncover anything wrong, especially spot checking extreme values?

Does it match some other calculation I can do? Or some other dataset?

We recently used these checks to look over our handling time dataset, but realised we didn’t have another dataset to compare against. We decided to make a new dataset to compare our data against, to give us confidence that our data was trustworthy and usable!

⏰ Handling time and why we need it

We define handling time as the length of time a COp works towards the completion of a task. Usually, this means a COp has a task open on their screen and they aren’t working on something else.

We also track how much time a COp spends using BizOps (the internal tool we use to talk to customers) and how much time they spend outside of BizOps. A lot of our processes mean COps might need other tools to complete a task, like Google Sheets or Slack.

With this data, we can understand

how our COps spent their time, and therefore where our budget is going

which customer queries are the most time-consuming

how many COps we need on a given day

how efficiently we schedule COps vs demand

This helps us make sure we have the right number of COps to serve our customers, and we know which areas or processes need more support. We only use handling time to improve our business processes and tools, not to assess the individual performance of COps.

🤯 The problem: impossible numbers

We started with the first check: Are the numbers possible?

We’ve had handling time data for a while, but in 2021 while doing analysis on scheduling efficiency we discovered that even when a COp was working in our internal tooling we only knew which exact task they were working on 60% of the time. According to our data, COps spent the remaining 40% working on two or more things at the exact same time, which we know isn’t possible!

We found multiple reasons for this, including missing data where:

an ad-blocker was blocking some of our tracking 🙈

we allow COps to use multiple tabs and windows of BizOps at any one time, but weren’t emitting events to tell us which one they were using

We also found we had some incorrect assumptions. For example, we rely on data logic to give the events meaning. If a COp clicks on one thing and then another, is that work? Which task were they working on? This relies on an understanding of how COps use our tooling, and what they’re trying to achieve.

We decided to shadow some COps to help us answer these questions. We saw a wide range of working patterns we just weren’t accounting for in our logic. For example, assuming COps always assigned themselves to one task at a time turned out not to be correct.

Because of these issues, our raw handling time figures were between 10x and 300x larger than the time COps spent logged in. Those figures were completely unreasonable and physically impossible, so we knew we had a big problem 😬

This completely blocked our ability to run experiments or accurately measure impact of changes, so the sole Operations product squad made the tough decision to stop working on our goals, and focus the entire product squad on improving our handling time data.

👀 We got to a reasonable answer

Then we moved to the second check: Are the numbers reasonable?

Our multi-disciplinary team of web and backend engineers as well as product, design and data folks came up with several solutions over the next two months to help us to fix our data. These included disallowing ad-blockers on our internal tooling, new events to help us understand which tab was in use, and simplified data logic that made as few assumptions as possible about what workflow the COp was following.

With these first fixes, we could identify one task per COp for >99% of tracked handling time. The numbers were possible ✅

We saw occupancy (the amount of handling time during a COp’s shift) drop below 100%. This is good! We were actually seeing idle time (which we knew must exist, everyone needs and should take breaks!). The numbers were reasonable.

Step three was to run spot checks for extreme values. We didn’t uncover any issues.

❓But was it the right answer?

Then we hit a bit of a problem. We needed this data to be very accurate, ideally to within 1% of the true value.

We picked this level of accuracy so we could:

run experiments where we could detect subtle changes in handling time required per customer, like the impact of combining tasks together or tooling improvements

use handling time values for forecasting staffing needs (changes of 1-2% in our COps required time have a substantial impact on our cost as a business, so we want to have confidence that we’re making good decisions)

But once the squad had reached the realm of “this data is not obviously completely wrong”, we weren’t sure if any possible change improved handling time or made it worse. We didn’t know whether we were “done” or had more to do. We didn’t have a benchmark to measure our data against. So now we’d reached step 4 of our validation checking process: Does this match some other calculation I can do? Or some other dataset? We didn’t have another dataset to compare to, so we decided to make one.

📼 Initial manual data validation

We came up with a plan to manually measure handling time and compare it to our data. With help from the security and data privacy teams as well as COps managers, we asked COps to opt in to record their screen and upload a video securely so we could check our data against it.

We started out very manually. For each screen recording we had, someone would watch the video, and type out the timestamps and task IDs as the COp in the recording switched between tasks. The whole squad pitched in to validate 4 hours of video, and it was enough for us to determine that for short, self-contained tasks our new data was accurate to within seconds of the true value 🎉

📈 Scaling the validation process

Although it worked to get us confidence in the first set of results, it was immediately clear that the manual process we’d used to make our dataset wasn’t scaleable.

We found:

we got the task IDs wrong 50% of the time when typing them in manually (upper case i and lower case L look far too similar in URLs in Chrome!)

it took 4-5 minutes to annotate 1 minute of video and at that rate, we’d be validating forever, and the cost to scale this across our operation would be very high (we needed to validate 250+ processes, with 60+ minutes of video each)

Because the process was so manual and error-prone, it was hard to trust the data we were gathering, and the data scientists in the team had to go through every piece of data gathered with a fine tooth comb to spot mistakes. We couldn’t use this as a definitive dataset.

The web engineers in the squad came up with a brilliant idea to embed the time and task metadata into a QR code onscreen in BizOps. We could then read this data back out of the videos we collected, allowing us to collect the data much more reliably without manually typing anything out. The web engineers built a tool that the squad, and eventually COps, could use to watch these videos and annotate them with handling time data. The only thing we needed human input for was to stop the video at the right time, and indicate what the COp was doing, for example working in or out of our tooling, or taking a break, with the click of a button. All the data was sent to a Google Sheet and from there into our data stack.

We then scaled this process across lots of different types of work that our COps do. We started to validate our data against the annotation results by getting COps to annotate videos from other COps. We’ve now got data for activity types making up 24% of the work that COps do!

🚀 Validation has already paid off

We quickly identified some problems with our logic for more complex workflows, where COps often switch between multiple different tasks for the same user. These cases were leading us to undercount handling time for these workflows by ~10%. We were able to change our logic with confidence, knowing that it still matched the validation results for previous tasks. We’ve since identified more cases of missing or incorrect data in our events, and are even able to estimate how big a problem each error we find is to understand the impact of solving it.

Now that we trust the data, we’ve been able to run multiple experiments on our internal tooling as well as customer-facing changes. Some of the results have surprised us and helped us re-evaluate how we can be most effective in helping our operations scale, so the data is already proving its worth.

🍰 What we’re working on next

We don’t want this to be a one-off fix to our handling time data only for it to break again in a few months time as our operations and tooling change. We want to avoid the common problem of “data mould”. We need to keep our trustworthiness checks going.

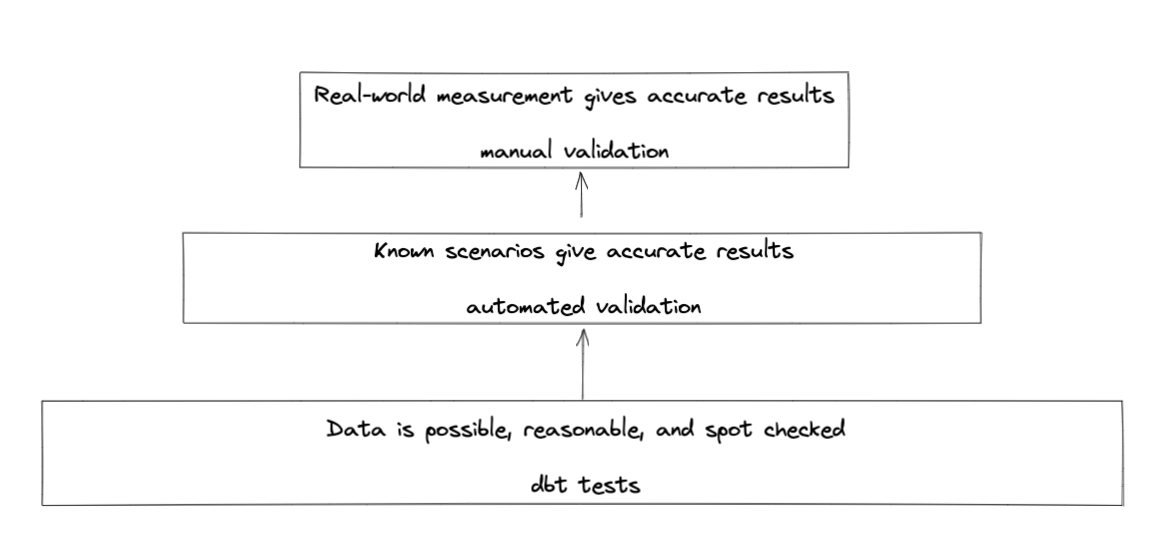

Checks 1-3 were relatively easy to incorporate into the tests that we run regularly on our data with dbt. We’ve written tests to alert us to physically impossible data or an increasing number of outliers, which will protect us from extreme changes to the data.

We’re planning to incorporate some of the scenarios we’ve seen play out in the videos into some automated testing. This is where we automate those actions in a browser and check that the events and handling time output are as we expect them to be, which will help us validate with even less effort, especially once we’ve got a good understanding of what kind of scenarios we need to test.

But we’ll also continue to validate our data manually to have confidence that our data matches the thing we want to measure, and we think this investment in data quality is well worth it. With each of these validation “layers” in place, we’re protecting handling time from data mould while Monzo grows to support more customers and products.

If the process I’ve described, or the work we’re about to do next, sounds exciting, you should come and join us! We’re hiring for data analysts to join our Operations data team.