I’m Sarah, and I’m part of Monzo’s Quality Assurance (QA) team, more generally known as our ‘testers’. I help my squad ship amazing features by anticipating what needs testing, writing and fixing automated tests, and testing each release before it hits the app stores.

We want to make the app as bug-free and smooth as possible, but we’re a small team and can’t just rely on QA folks to catch issues before we ship the app. We know our customers love our app for its speed and ease of use, so what can we do?

Everyone at Monzo can test

Just as everyone at Monzo does research, everyone at Monzo can also get involved in testing.

There’s sometimes a bit of debate in the industry about inviting all employees to test your product before it’s shipped, but at Monzo full-time testers aren’t gatekeepers on every change made in the code. In fact, there are many points, both during development and before shipping, where you can test things. A common argument against ‘everyone doing testing’ is that people who aren’t trained in testing won’t do a very good job of it, but different types of testing suit different goals and outcomes. None of these can replace specialist testers, but they can help you ship more safely.

We choose the types of testing we want to use at different times within our squads. Usually a tester, or a backend or mobile engineer, can suggest which testing methods would give us useful information about the feature we’re going to ship.

A lot of the following approaches to testing changes are optional and specific to the project or feature the team is building. We do a lot of risk-based testing, which means we think about the impact a change could have if it went wrong, and how that would affect customers.
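One way to make that impact-based thinking concrete is to give each change a rough score and point testing effort at the riskiest changes first. The sketch below is a hypothetical illustration, not Monzo’s actual process: the `Change` type, the 1-to-5 scales and the likelihood × impact score are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """A hypothetical change awaiting testing, scored on assumed 1-5 scales."""
    name: str
    likelihood: int  # how likely the change is to go wrong (1 = unlikely, 5 = very likely)
    impact: int      # how badly customers would be affected if it did (1 = minor, 5 = severe)

    @property
    def risk(self) -> int:
        # Simple likelihood x impact score; real risk assessments are usually more nuanced.
        return self.likelihood * self.impact


def prioritise(changes: list[Change]) -> list[Change]:
    """Order changes from highest to lowest risk, so testing effort goes there first."""
    return sorted(changes, key=lambda c: c.risk, reverse=True)


changes = [
    Change("Tweak copy on a settings screen", likelihood=2, impact=1),
    Change("Change how payments are sent", likelihood=3, impact=5),
    Change("New layout for the account feed", likelihood=3, impact=3),
]

for change in prioritise(changes):
    print(f"{change.risk:>2}  {change.name}")
```

Multiplying the two scores means a change that is both likely to break and painful for customers rises to the top, while a low-impact copy tweak can get lighter testing.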

Here are some different approaches that we can use to ‘test’ features as we ship. They are all useful tools to tackle different problems.

🕵️‍♀️ Quality Assurance (QA) testing

Our Quality Assurance testers generally check that new features behave as expected and that nothing adverse has happened to the rest of the app. For example, if an engineer makes a change to personal accounts in the app, we want to make sure we haven’t inadvertently broken anything for business or joint accounts. We also test for things like accessibility: can customers who use screen readers navigate the app? Are all buttons visible and clickable? Is it even obvious that they are buttons?

As testers we raise bugs directly with the team both when testing new features and when generally using the app.

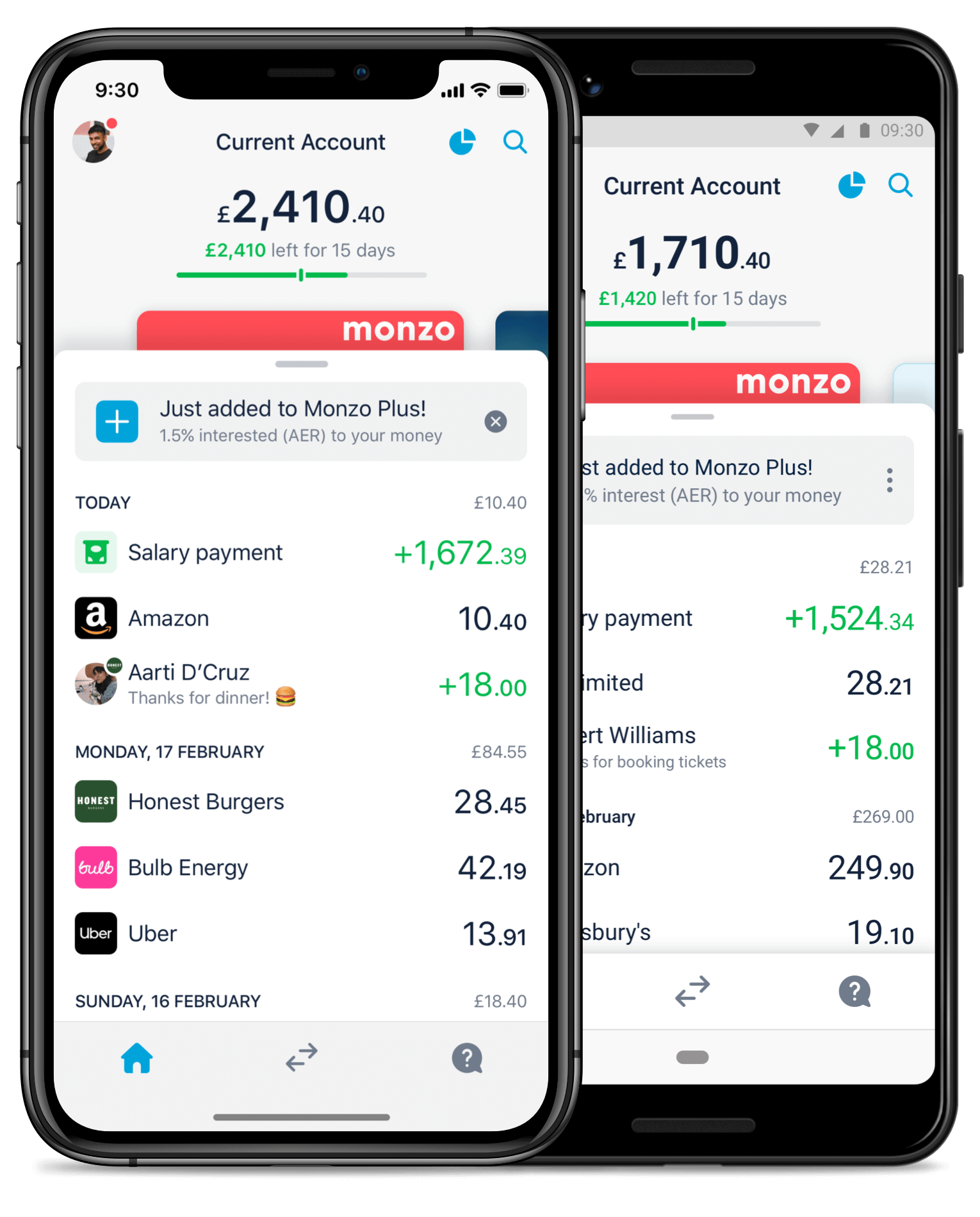

We have a staging environment that looks very similar to the app you use every day. We use it to simulate all kinds of real-world scenarios, like being overdrawn, getting paid, having a large balance in your account, a loan or Flex payments, or making payments from another country.

We use this staging environment every day to test changes, features, and bug fixes before we merge them into the ‘live’, or production, environment, which is the one customers interact with.

As well as manually testing, our testers often pair with engineers to write test plans, try out unhappy paths, and look for bugs whilst they’re writing their code. Our engineers already have robust processes in place to peer review their code, and do integration and unit testing, so this additional testing gives us extra reassurance we’re not introducing any issues or bugs.

If we find something in the app acting in a way it shouldn’t, we report it to the relevant team. As testers, we usually dig into what might have caused the issue, and we share as much information as we can about our device setup and the steps to reproduce it, so the team can see it for themselves. We also share how severe we think the issue is, how likely it is to affect customers, and which group of customers will be affected.

🤖 Automated testing

Before we release a new app update to the app stores, we run a regular set of automated regression tests (more on these below), and we need to run the same kinds of tests every week. When tests run without manual input from a person, we can find out if anything is broken without committing too much time to it.

We run these tests several times a day because we get quicker feedback when something has broken and we can isolate the root cause much faster.

🚦 Regression testing

Before the app update makes it to the App Store on iOS or Play Store on Android, we run a set of regression tests against it. These look at basic functionality like the ability to log in, upgrading to the new release version, signing up for a new account, and making transactions.

Every week, one tester on a rotation spends a few days working through both the Android and iOS tests, using a mix of automated and manual testing. They report the outcomes to the engineers responsible for shipping the app to customers that week, and once we’re happy with the result, the engineers ship it to the app stores. Check out our post on our mobile release process.

Automated and regression testing covers the main flows in the app, because these are essential. But not everything can be, or should be, automated. Depending on the feature we’re testing, we might want to expand our testing to user research and staff testing. We wouldn’t use automated testing on an experiment that we only plan to try on a small percentage of customers, because it would be lots of upfront work for what might be a small result.

📲 User research

We do user research to test prototypes, or to ask customers who haven’t used a feature before to try it, so we can see where our own assumptions don’t hold true. For example, we might think a button is really obvious, but if a customer gets stuck or clicks somewhere else, we can use that information to make it easier for them.

This form of testing is much more about our customers’ behaviour, expectations and the usability of what we build than about checking whether a feature is bug-free.

👯‍♂️ Team testing (dogfooding)

We work in teams and each team has a focus. Each product team is made up of a mix of engineers, product managers, designers, engineering managers, data scientists, marketing specialists, writers and business analysts.

That whole team will work on a new feature to ship to customers, and they’re in a great position to test the feature from the point of view of a customer. The goal of team testing or “eating our own dog food” is to put ourselves in the customer's shoes and see things from their point of view, build empathy, and increase our understanding of customers' pain points and needs.

Rather than testing for visual issues, pixels being wrong, or functional requirements, the team are looking for improvements in the experience of trying out a new feature. However, any bugs or improvements found along the way are a bonus.

👩‍💻 Staff testing

The great thing about working for Monzo (and a bank generally) is that our staff use the app every day. They spend money when they’re out and about or buy things from the comfort of their sofa, get paid, and move money around. They use the same functionality as our customers.

The best thing about getting feedback from staff is that everyone has a different setup: different devices, operating systems, text sizes, transaction volumes, the features they use, and the list goes on! Our staff accounts are as varied as our customers’ accounts, because our staff are customers too. We have a Slack channel called #product-feedback where staff can suggest improvements and let us know if something didn’t work as expected.

Sometimes, when we’re preparing to launch a really big feature, we give staff early access to it for a week or two before we launch it to our millions of customers. This gives us a chance to ask for feedback and to check whether anything was unclear, didn’t meet expectations, was confusing, or plainly just didn’t work. The great thing about doing this is that our staff want to test the feature: they want early access, they become knowledgeable about what we’re shipping, and by helping us solve issues before release, they make sure our customers won’t get stuck and can enjoy the new feature with ease.

β Beta testing with the community

Did you know we have beta testers for the app? They’re a small group of our users who get an early, less-than-perfect release version of our app. Those early releases might have some bugs and issues, so we rely on their feedback to find problems early.

We have nearly 4,000 iOS beta testers and over 21,000 Android beta testers for a release, but not all of them will download each new release. We create a release candidate, which is a version of the app that has all the new changes we want to offer. We then invite a sample of those beta testers (who don’t work at Monzo) to download the app build and make sure they can still use their app as expected.

If you want to join our group of beta testers, you can! If you have an Apple device, join us on TestFlight. If you have an Android device, there are a few more steps:

1. Open the Play Store app.
2. Search for Monzo Bank and open the App Detail page.
3. Scroll to the Join the beta section and select Join.
4. Once you’ve been added to the beta program, make sure the app has updated. If it hasn’t updated automatically, select Update at the top of the App Detail page.

Beta feedback is important and very useful: it might surface a crash tied to a specific screen or set of circumstances, or a user might find a unique issue. We love brutally honest feedback from this group. They can say what they like and it won’t hurt our feelings. The best feedback is honest and practical and helps us make the best possible product for our customers. The same goes for the Monzo community: these are some of our most engaged customers, and they bleed ‘Hot Coral’ (we see you). They will be the first to say “we think you could have done something differently” or “this doesn’t work”.

Despite the value of their feedback, we don’t just give beta testers and the community the same app we would give to our internal testers or staff. We really don’t want to break the app for this group. They are still customers, it is still their bank, and they expect a standard of polish when we ship.

🧫 Experiment testing

We run a lot of experiments at Monzo to test our ideas. When we want to try adding a new feature or functionality to the app, we run experiments to see whether our customers like it, use it and get the benefits we hope they will.

We use a lot of data to measure the success of experiments when developing new features, and we use a control group to see whether our hypothesis that “customers will love this and use it” is a reality or just wishful thinking. Sometimes we start by shipping an experiment to 10% of users and, if it proves successful, keep rolling it out until it reaches 100% of customers. For example, the newish ‘Manage’ tab on Pots was rolled out very slowly, because our ‘feed’ (transactions in and out) is usually pretty massive. There are often lots of transactions for food, holidays and bills, so changes to these areas can take a fair amount of time to load correctly, and we didn’t want any issues from rolling this out to loads of customers at once. With experiments, we were able to tweak and fix the experience as we slowly rolled it out to all customers.
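A common way to implement this kind of gradual percentage rollout is deterministic bucketing: hash each user ID together with the experiment name into a number from 0 to 99, and show the feature to users whose bucket falls below the current rollout percentage. Because the hash is stable, widening the rollout from 10% towards 100% only ever adds users. The sketch below is a hypothetical illustration, not a description of Monzo’s experimentation system; the function names, the SHA-256 hashing scheme and the `pots_manage_tab` experiment name are all assumptions.

```python
import hashlib


def bucket(user_id: str, experiment: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given experiment."""
    # Including the experiment name means the same user lands in different
    # buckets for different experiments, so rollouts don't line up by accident.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100


def variant(user_id: str, experiment: str, rollout_percent: int) -> str:
    """Return 'treatment' if the user should see the new feature, else 'control'."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    return "treatment" if bucket(user_id, experiment) < rollout_percent else "control"


users = [f"user_{i}" for i in range(10_000)]

# Start at 10%, then widen the rollout; anyone already in treatment stays there.
at_10 = {u for u in users if variant(u, "pots_manage_tab", 10) == "treatment"}
at_50 = {u for u in users if variant(u, "pots_manage_tab", 50) == "treatment"}

print(len(at_10), len(at_50))  # approximately 10% and 50% of the 10,000 users
print(at_10 <= at_50)          # True: widening the rollout only adds users
```

Hashing the user ID, rather than choosing users at random on each request, keeps each customer’s experience consistent between sessions. In this simplified sketch, users outside the rollout act as the control group to compare against.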

🧪 Monzo Labs testing

Monzo Labs is a feature in your Monzo app (tucked into Settings) that lets you try out new features that aren’t automatically enabled for all customers.

Labs helps us roll out features safely. We choose features we haven’t completely finished developing and see how the customers who use them respond. We don’t opt any customers into features in Labs; instead, customers choose to use the functionality while we keep refining it. Once you open Labs, you’ll see what you can opt into. Recently, we made dark mode available so you can try it out for yourself.

The catch is that we wouldn’t put something in Labs that hasn’t gone through other stages of testing, because a lot of our customers can use it. Small visual issues aren’t a huge problem, but functional issues are.

🚀 Releasing to all customers

A huge shoutout to our social media team and Customer Operations staff here. Our customers, existing and new, can run into problems and reach out to us in whatever way suits them best. When they report a problem, we do our best to find the issue and fix it before any more customers are affected.

In the early days of Monzo we had very few customers, and we could roll out all new changes to a few hundred people. The impact of something going wrong was bad, but not too bad. Now that we have millions of customers, the impact of any issue is much greater.

Layers of testing for best results

Every time we ship a new feature, we have to decide which testing methods are most appropriate to get the best outcome for customers. Often that means combining these techniques. For example, sometimes it makes sense to put a feature through staff testing and ask the community for feedback, but only add it to Labs once we’re sure it’s working as expected.

In the long run, we think that by using a combination of all these testing approaches we will help build Monzo in a way that takes different customer needs into consideration and ultimately works for everyone. If you’re a tester, how many of these techniques do you use? If you aren’t a tester, find out how you could take part in testing in your own company. And, if you want to help us test, join our community and beta testing crew too!

And if you’d like to go further and join our teams, we're currently hiring for Mobile QA Testers, Android Engineers and iOS Engineers.