You’ve probably heard stories of the dreaded ‘mobile release process’ before, right? Stories of brave developers battling through hordes of change requests, JIRA ninjas gathering all the information into a single board, Unix wizards invoking incomprehensible scripts, and evil bugs appearing late in the day, throwing the whole software development land into disarray.

Well, this is the story of how that process evolved over time here at Monzo. It’s a reflection of how requirements change as a company grows, and how you can sustain a steady stream of releases even as the number of changes going into each version increases.

Level 1: One team, ad-hoc releases

Originally there was only one team at Monzo with mobile engineers. It was called the ‘product’ team, and it had only a couple of engineers on each of the Android and iOS platforms. Everyone pretty much knew what everyone else was working on at any point in time. In a team of two people per platform, you are guaranteed to have either written or reviewed every piece of code in the codebase. Since there were so few of us, we rarely had much ready to release at any one time.

We’d raise pull requests against the main branch on GitHub, and merge them when we were happy with the code. Whenever we completed a feature, we’d test it manually, and while testing was in progress we’d stop merging things to main. This was not a huge deal, as the mobile developers were also involved in testing, and it only took a couple of hours to cover the whole app. We’d then build a release version of the apps on our laptops, and upload the relevant files to the App Store and the Play Store. The product manager in our team also had marketing experience, so he’d help write the release notes based on the feature that had just been added.

Once it was uploaded, we’d give it a spin on our personal phones to make sure everything worked as expected.

Level 2: Multiple teams

The product team kept growing, and at some point it became impossible to hold meetings with so many people. Eventually we split into 3 separate teams with mobile coverage, each working on different new features for the app. This brought quite a few new challenges, and we came up with different strategies to tackle them.

Branching strategies

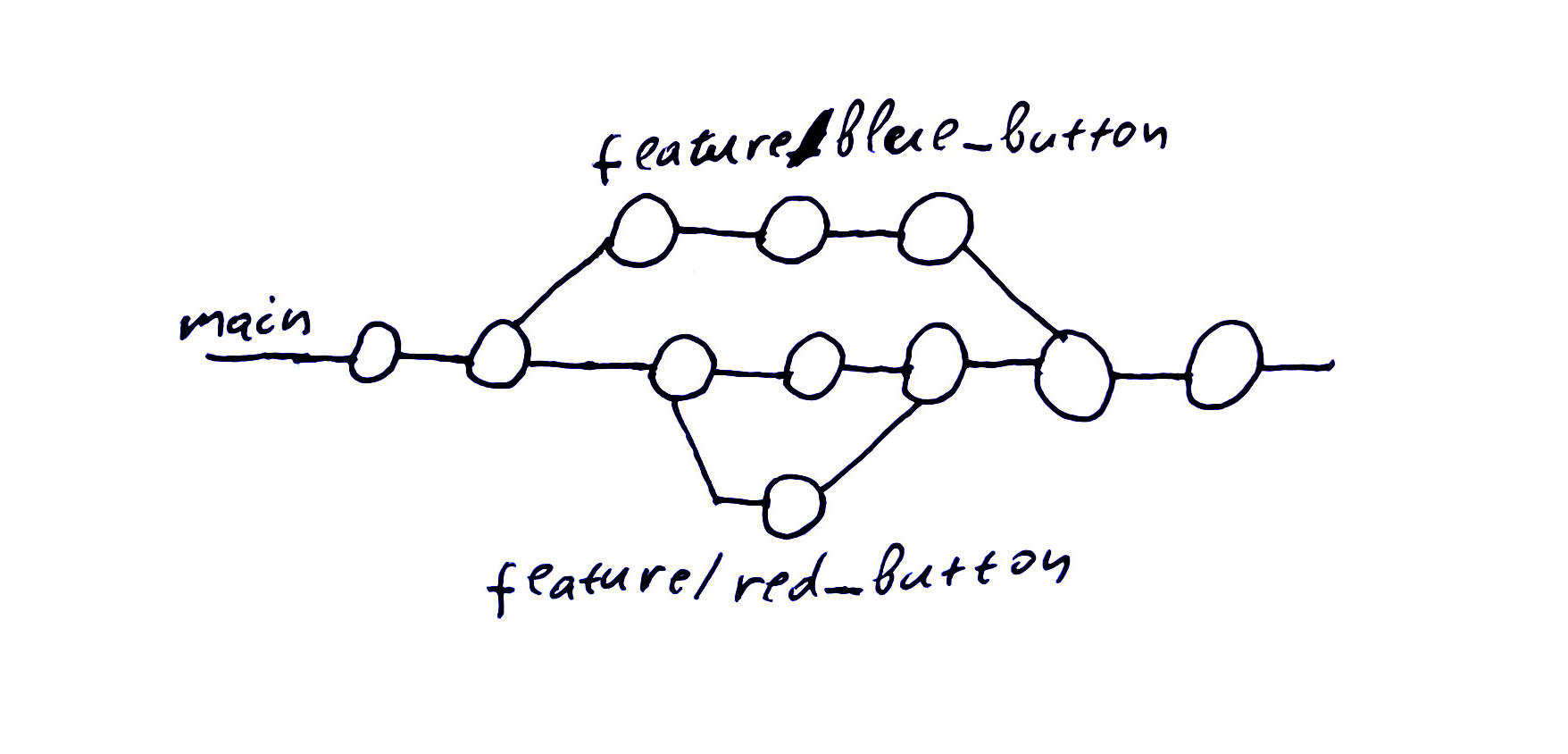

Now that we had multiple teams working autonomously on separate features, it was no longer feasible to stop all development whenever we wanted to do a release. So, we introduced a form of trunk-based development. We tried to keep the flow very simple, and it has remained pretty much the same since then.

For daily development, we don’t use feature branches; instead we use short-lived branches for individual changes. We create a branch, make a small change on it (up to 400-500 lines of code), raise a pull request, review it, and then merge it back to the main branch. Of course, we also make sure that for every branch that gets merged:

All our tests still pass (so the app still works correctly)

We don’t expose incomplete functionality to the user (more on that later)
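The post doesn’t say how the 400-500 line guideline is enforced, if at all. As a minimal sketch, assuming a CI step that feeds it the output of `git diff --numstat origin/main...HEAD`, a check could sum added and deleted lines and flag oversized pull requests. `MAX_CHANGED_LINES`, `changed_lines` and `within_budget` are hypothetical names.

```python
"""Hypothetical CI check: flag a pull request whose diff exceeds a line budget."""

MAX_CHANGED_LINES = 500  # assumption: the upper end of the 400-500 line guideline


def changed_lines(numstat_output: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output.

    Each line looks like "<added>\t<deleted>\t<path>"; binary files
    report "-" for both counts and are skipped.
    """
    total = 0
    for line in numstat_output.splitlines():
        if not line.strip():
            continue
        added, deleted, _path = line.split("\t", 2)
        if added == "-" or deleted == "-":
            continue  # binary file: git reports no line counts
        total += int(added) + int(deleted)
    return total


def within_budget(numstat_output: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """True if the diff stays within the agreed size for a single change."""
    return changed_lines(numstat_output) <= limit
```

Counting deletions as well as additions is a judgment call; a large pure deletion is usually easy to review, so a team might reasonably count only additions instead.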

When it comes to releasing:

We create a release branch off of the main branch.

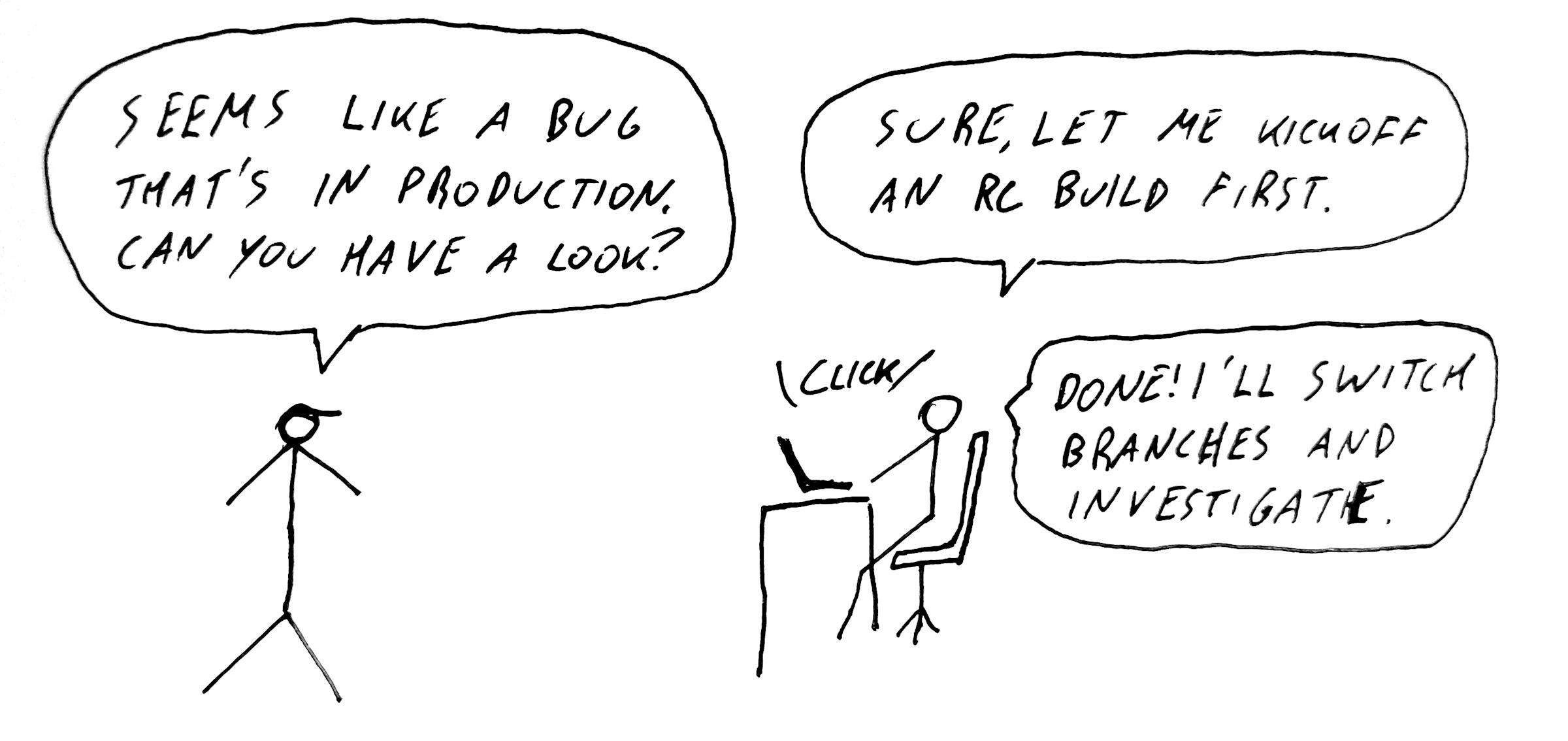

We build a version of the app from that branch to be smoke tested. We call this an RC (release candidate) build, starting with RC-1. If testing uncovers a problem, we make a change on the release branch, then build RC-2, and so forth.

Once we’re happy with an RC, this will be released to users. How this is done differs per platform, and we’ll cover that later in this post.

We also merge all the changes in the release branch back to the main branch at this point.
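To make the release-branch flow concrete, here is a small sketch that generates the git commands a release owner might run for one train, plus RC tag names. The `release/<version>` branch and `v<version>-rc<n>` tag formats are assumptions for illustration; the post doesn’t describe Monzo’s naming scheme, and these helpers only build command strings rather than running them.

```python
"""Hypothetical helpers for the git side of one release train.

Branch and tag names are illustrative, not Monzo's actual naming scheme.
"""


def release_branch(version: str) -> str:
    return f"release/{version}"


def cut_release_commands(version: str) -> list[str]:
    """Commands to branch a release off the latest main."""
    branch = release_branch(version)
    return [
        "git fetch origin",
        f"git checkout -b {branch} origin/main",
        f"git push -u origin {branch}",
    ]


def rc_tag(version: str, rc_number: int) -> str:
    """Tag for a release candidate: RC-1 first, then RC-2 and so on after each fix."""
    if rc_number < 1:
        raise ValueError("RC numbers start at 1")
    return f"v{version}-rc{rc_number}"


def merge_back_commands(version: str) -> list[str]:
    """Commands to bring fixes made on the release branch back into main."""
    branch = release_branch(version)
    return [
        "git fetch origin",
        "git checkout main",
        "git pull --ff-only origin main",
        f"git merge --no-ff origin/{branch}",
        "git push origin main",
    ]
```

Using `--no-ff` keeps an explicit merge commit on main, which makes it easy to see when each release branch was folded back in.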

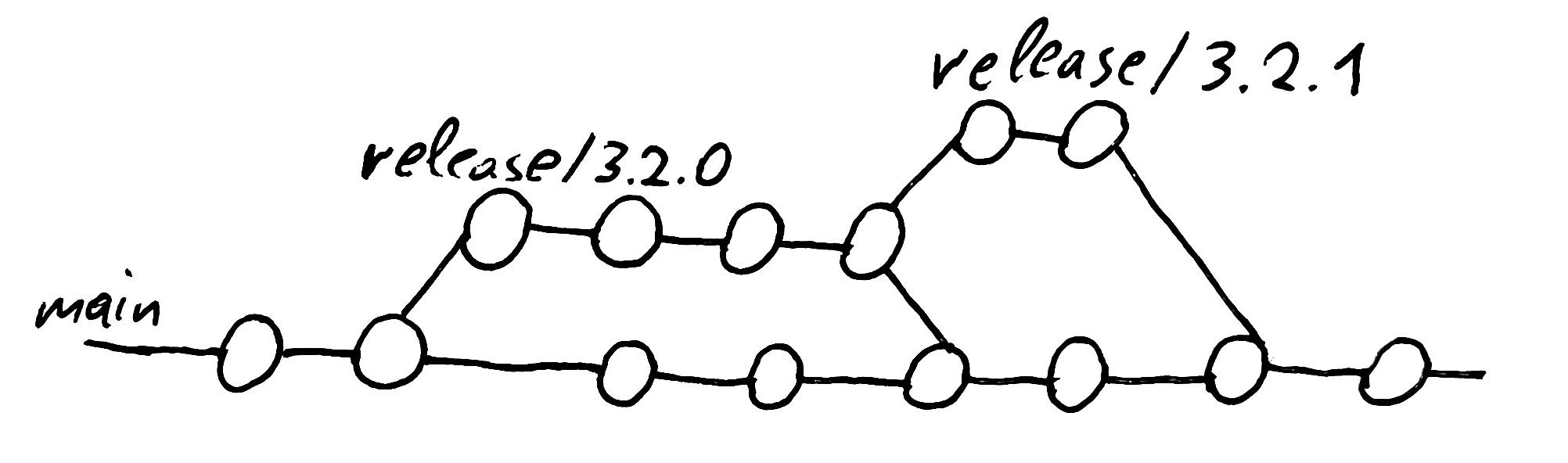

If we find an issue after we’ve released to actual users, we can either wait for the next release to fix it or, if it’s an important bug, short-circuit the process and fix it in the current release. We call this a hotfix, which is like a minor release within a release. We cut a branch off the current release branch, make the change on that hotfix branch, and build an RC. This time we only test the fix, because a full smoke test would be inefficient and time-consuming here. Then we release the hotfix build to users.

By ‘smoke testing’ we mean a set of tests we run to find possible regressions in important parts of our app. New features are tested individually, right after they get merged to our main branch, but we only do smoke testing during our release process.

Release schedule and versioning

When it came to releasing, ad-hoc releases were no longer possible. One team might have completed a feature while another had not. So we arbitrarily settled on a fixed release schedule of every two weeks. This tends to be the most common cadence at other companies, and we knew we could change it later if needed. We called these intervals ‘release trains’.

Additionally, we introduced a consistent versioning system (major.minor.hotfix) to be able to talk about releases across the whole company.
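A minimal sketch of the major.minor.hotfix scheme follows. It assumes that each regular release train bumps the minor component and resets the hotfix component, while a hotfix bumps only the last component; the post names the three parts but doesn’t spell out these bump rules, and `Version` is a hypothetical type.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    hotfix: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        """Parse "major.minor.hotfix"; raises ValueError on any other shape."""
        major, minor, hotfix = (int(part) for part in text.split("."))
        return cls(major, minor, hotfix)

    def next_release(self) -> "Version":
        # assumption: each regular release train bumps minor and resets hotfix
        return Version(self.major, self.minor + 1, 0)

    def next_hotfix(self) -> "Version":
        # a hotfix is a small release within a release: only the last part changes
        return Version(self.major, self.minor, self.hotfix + 1)

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.hotfix}"
```

Because `Version` is a tuple, versions compare component by component, so `Version.parse("4.12.1") > Version.parse("4.12.0")` holds without any extra code.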

Release owners

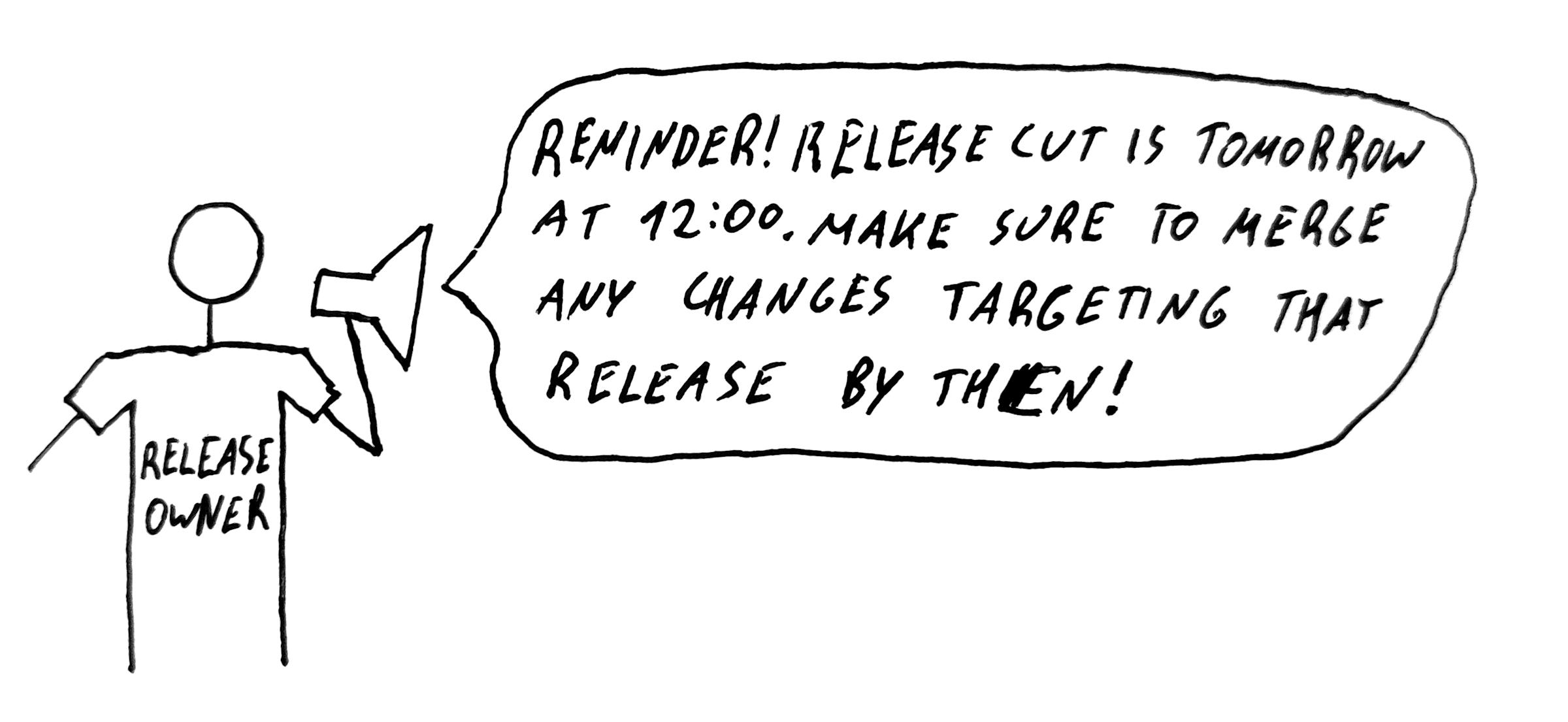

We also introduced the concept of ‘release owners’: the people responsible for actually doing all the tasks a release requires, such as running git commands, building and signing the release app, smoke testing it, and uploading it to the relevant store. Release owners include an Android engineer, an iOS engineer, and a QA tester. They are also responsible for deciding what, if anything, makes it into a hotfix.

Folks take turns being a release owner, and we have a set rota for the next few months.
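A rota like this is easy to generate. As a sketch, assuming one Android engineer, one iOS engineer and one QA tester per train and a configurable train length, each pool can be cycled independently. `build_rota` and the example names are hypothetical.

```python
from datetime import date, timedelta
from itertools import cycle


def build_rota(start: date, trains: int, android: list[str], ios: list[str], qa: list[str],
               interval: timedelta = timedelta(weeks=1)) -> list[tuple[date, str, str, str]]:
    """Assign one Android engineer, one iOS engineer and one QA tester to each train.

    Each pool is cycled independently, so people in a smaller pool come round more often.
    """
    if not (android and ios and qa):
        raise ValueError("every pool needs at least one person")
    android_cycle, ios_cycle, qa_cycle = cycle(android), cycle(ios), cycle(qa)
    return [
        (start + interval * n, next(android_cycle), next(ios_cycle), next(qa_cycle))
        for n in range(trains)
    ]
```

For example, `build_rota(date(2024, 1, 2), 3, ["A1", "A2"], ["I1"], ["Q1", "Q2", "Q3"])` gives A1/I1/Q1, then A2/I1/Q2, then A1/I1/Q3 on consecutive Tuesdays.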

Incomplete features: feature flags

Having a regular release schedule introduced a new problem though: How do we avoid releasing features that are half done to our users?

To get around that, we use a concept called ‘feature flags’. These are boolean flags that the server sends to the client to enable and disable features. While a feature is half-done, we keep its flag disabled until it is complete. The flag service can also enable or disable flags for particular app versions, which makes it easy to roll out features without breaking old apps. It even allows us to do staged rollouts of those features.
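The following is a minimal sketch of how a flag service might compute the booleans it sends to a client, assuming each flag has an on/off switch, a minimum app version, and a rollout percentage. It isn’t Monzo’s service; `FlagRule`, `rollout_bucket`, `is_enabled` and `flags_for_client` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass

AppVersion = tuple[int, int, int]  # (major, minor, hotfix)


@dataclass(frozen=True)
class FlagRule:
    enabled: bool
    min_app_version: AppVersion = (0, 0, 0)  # older apps never see the feature
    rollout_percent: int = 100               # staged rollout: share of users who get it


def rollout_bucket(flag_name: str, user_id: str) -> int:
    """Deterministically map a user to a bucket in [0, 100) for one flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(flag_name: str, rule: FlagRule, app_version: AppVersion, user_id: str) -> bool:
    if not rule.enabled:
        return False  # half-done features stay switched off
    if app_version < rule.min_app_version:
        return False  # this app version lacks (working) code for the feature
    return rollout_bucket(flag_name, user_id) < rule.rollout_percent


def flags_for_client(rules: dict[str, FlagRule], app_version: AppVersion,
                     user_id: str) -> dict[str, bool]:
    """The boolean map the server would send to one client."""
    return {name: is_enabled(name, rule, app_version, user_id) for name, rule in rules.items()}
```

Hashing the flag name together with the user ID gives each flag an independent cohort, and because a user’s bucket never changes, raising `rollout_percent` from 10 to 50 only adds users; nobody who already has the feature loses it.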

Initially we used a console-based tool to talk to the backend service responsible for this, but eventually, since we were using it so often, we built a web interface for it too.

Release retros

Now that more and more people were involved in the release process, we also introduced retrospective meetings for it. We run these every 6 months, and they’ve helped drive the rest of the improvements mentioned later.

Level 3: Automation

All of these new parts of our process were great from a collaboration standpoint, but they also meant that actually doing a release was taking more and more effort. A lot of the communication mentioned above, along with the git commands, the versioning, and the actual uploading to the stores, was still being done manually. So we decided to automate more of the process.

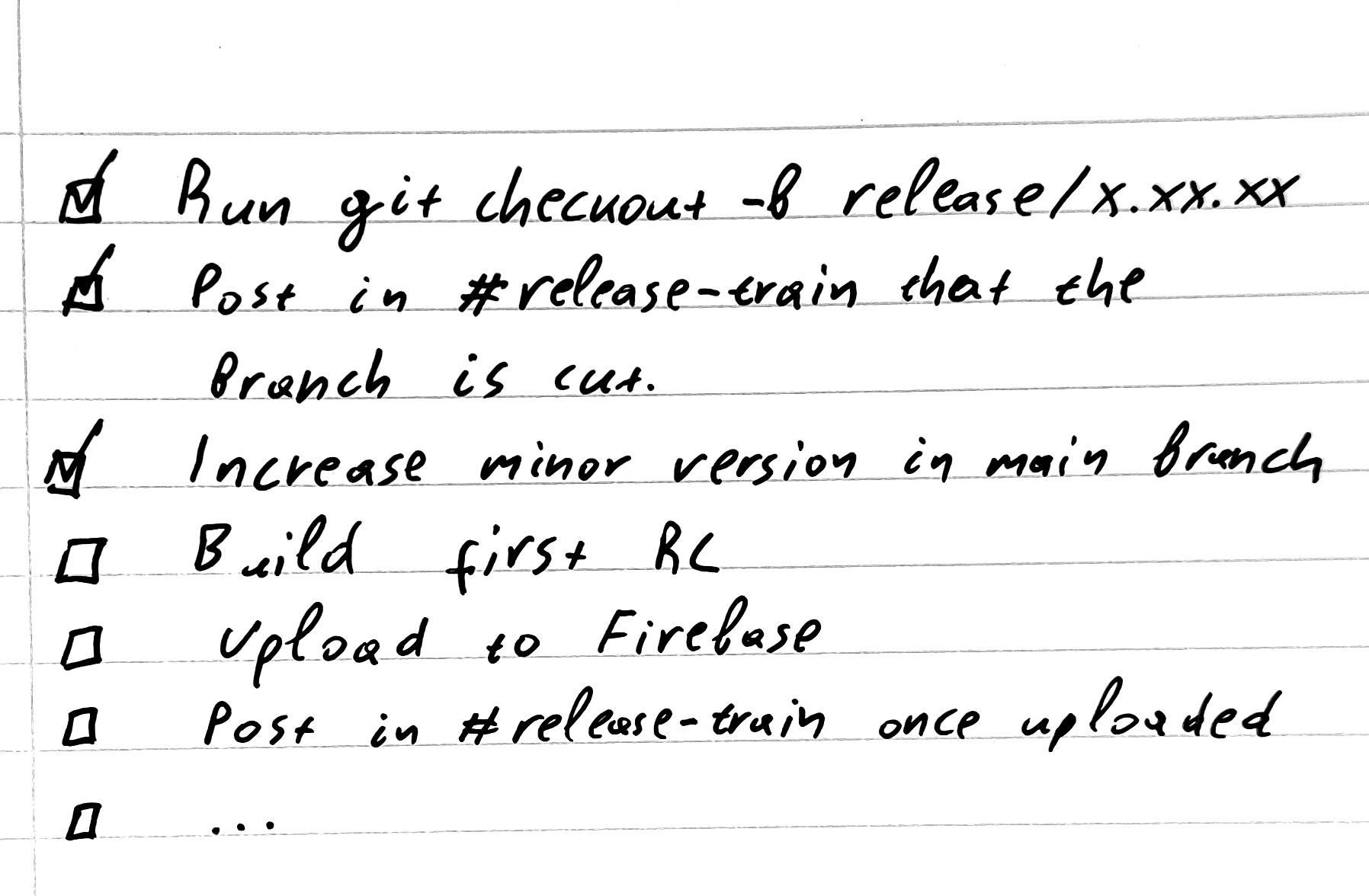

Documenting

After a few quite difficult releases, we decided to sit down, and actually document all the things a release owner needed to do during a release. Understanding our process and having it written down was a crucial first step before we could properly automate it.

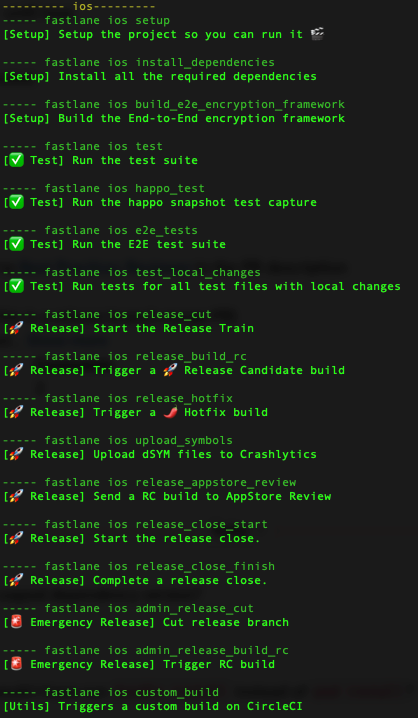

Fastlane

We then investigated the available scripting solutions for automating a release. The clear winner was Fastlane. It works for both Android and iOS releases, and it has plugins for almost anything we needed. For things that were missing (like posting to Slack), it was very easy to write a bit of Ruby code and add the support ourselves. The script itself also served as good documentation, showing the release owner the exact steps to follow in each release.

Testing

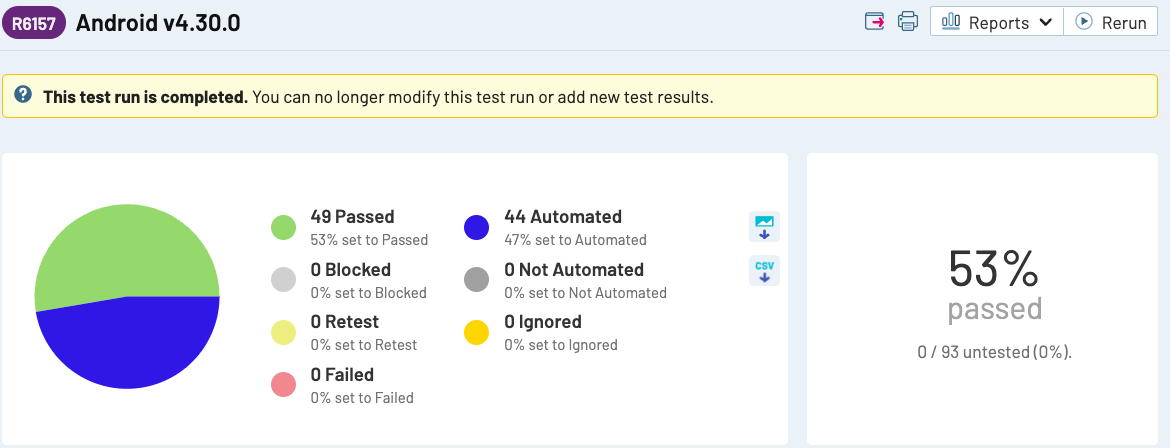

We also started organising our process from a testing point of view. Up until then, we maintained a list of smoke tests for each release in a spreadsheet. This was fine when we only had a couple of product testers and about 20-30 smoke tests. But as time went on, the test suite grew with every release: we currently have about 90 quite complex test cases that we need to run for every release candidate we build. A simple spreadsheet was no longer sufficient to coordinate this. So after some investigation into the available tools, we decided to use TestRail. We could now keep track of all our test cases and test runs in one place.

At the same time, we started writing automated end-to-end tests that exercise the mobile apps against our staging environment. We even implemented an integration with TestRail, so that we know which of the tests are automated. Over time, we have automated more and more smoke tests, giving us a much faster signal on regressions and leaving more time for exploratory testing. Check out this post by Sarah Byng on How everyone gets involved in testing at Monzo if you'd like to learn more about our approach to testing.

Weekly cadence

After we automated most of the big parts of the release process, we decided we could switch to a weekly release schedule. This benefited multiple groups:

Product teams benefit because they can release things faster, as they don’t have to wait for a two-week cycle to release something new.

Users benefit because they get new functionality every week.

The release owners benefit because there’s less pressure to put late fixes into a given release.

When something is hard, you need to do it often. By releasing so often, we’ve been forced to make the process as seamless as possible. Releasing every week also lets us keep the schedule within each release quite ‘tight’, so that everyone knows where we are in the process just from the day of the week. It roughly works like this:

Start the train on Tuesday. We aim to cut the release branch at around noon, and we build the first RC immediately afterwards.

Between Tuesday afternoon and the end of Wednesday, we do smoke testing, and build more RCs if necessary.

On Thursday morning we release to beta testers: ~25,000 users across both platforms. We use the Play Store ‘beta track’ for Android, and TestFlight for iOS.

On Friday morning, we release to 10% of our users on Android, and we submit the iOS app to the App Store for review (since it takes a couple of days to get approval).

On Monday, if all is well, we release to 100% for Android, and to everyone on iOS as well. The cycle begins again the next day.
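Because each step is pinned to a weekday, the schedule can be encoded as plain data. This sketch maps `date.weekday()` values (Monday is 0) to the milestones listed above and works out which Tuesday a given day’s train started on. `MILESTONES`, `train_start` and `milestone` are hypothetical names; a real setup would also need to handle holidays and slipped trains.

```python
from datetime import date, timedelta

# Keys follow date.weekday(): Monday == 0, Tuesday == 1, ..., Sunday == 6.
MILESTONES = {
    1: "Cut the release branch around noon, build RC-1 and start smoke testing",
    2: "Finish smoke testing; build more RCs if necessary",
    3: "Release to beta testers (Play Store beta track, TestFlight)",
    4: "Android: release to 10% of users; iOS: submit for App Store review",
    0: "Android: release to 100%; iOS: release to everyone",
}


def train_start(day: date) -> date:
    """The Tuesday on which the train covering `day` started (Monday closes the previous train)."""
    return day - timedelta(days=(day.weekday() - 1) % 7)


def milestone(day: date) -> str:
    return MILESTONES.get(day.weekday(), "No scheduled release step (weekend)")
```

For example, Monday 8 January 2024 belongs to the train that started on Tuesday 2 January, and its milestone is the full rollout.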

Level 4: Remote build and sign

This process worked well for some time, especially after we automated it, but there was an important problem that still needed addressing: the building and signing of release candidates was still done on our local machines.

This was a major security concern. In theory anyone with access to one of those developer machines could build, sign and upload anything they wanted, posing as the latest Monzo app.

It was also really inefficient, because building a release version of the app takes between 20 minutes and an hour, and during that time all of the laptop’s resources are used, rendering it pretty much unusable for other tasks. For release owners, this meant a significant amount of time was lost each week simply waiting for builds to complete.

The other difference was that by this point we’d grown enough as a company to dedicate a whole team (we called it the ‘mobile platform team’) entirely to this problem. As a result, we implemented one microservice per platform on our backend to automate the release process. Now, the bulk of the work is done remotely. The process to build a release candidate looks something like this:

The developer runs a local Fastlane command, which makes an HTTP request to the relevant release service.

The release service triggers a build job in our CI tool (we use CircleCI).

CI builds the release version of the app.
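The post doesn’t say which CircleCI API the release service calls. As a sketch of the triggering step, CircleCI’s API v2 exposes `POST /project/{project-slug}/pipeline`, authenticated with a `Circle-Token` header, which starts a pipeline on a branch with optional pipeline parameters. The project slug and the `release_platform` parameter below are hypothetical; any parameter passed this way must be declared in the project’s `.circleci/config.yml`.

```python
import json
import urllib.request

CIRCLECI_API = "https://circleci.com/api/v2"


def trigger_release_build(project_slug: str, branch: str, token: str,
                          platform: str) -> urllib.request.Request:
    """Build the request a release service could send to start a CI pipeline."""
    url = f"{CIRCLECI_API}/project/{project_slug}/pipeline"
    payload = {
        "branch": branch,
        # hypothetical pipeline parameter; it must be declared in .circleci/config.yml
        "parameters": {"release_platform": platform},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        method="POST",
        headers={"Circle-Token": token, "Content-Type": "application/json"},
    )
```

A service would send this with `urllib.request.urlopen(req)` and keep the pipeline ID from the JSON response so it can poll for completion before downloading the build.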

Once CI has built the app, the process differs between iOS and Android:

For iOS, the app needs to be signed on a Mac machine, so we build and sign it in CircleCI. Once the CI job is complete, the release service downloads the signed app file.

For Android, the app can be signed inside our service. So CircleCI simply builds an unsigned version of the app. Once the CI job is complete, the release service downloads the unsigned app file and signs it.
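The post doesn’t say which tool the Android release service uses for signing. One plausible approach for an APK is the Android SDK’s `apksigner`. This sketch builds an `apksigner sign` invocation that reads the keystore password from an environment variable so it never appears on the command line. The paths, key alias and `RELEASE_KEYSTORE_PASSWORD` variable name are assumptions, and `zipalign` has to run before `apksigner`, because modifying the APK afterwards invalidates the signature.

```python
import subprocess
from pathlib import Path


def apksigner_command(unsigned_apk: Path, keystore: Path, key_alias: str, signed_apk: Path,
                      password_env: str = "RELEASE_KEYSTORE_PASSWORD") -> list[str]:
    """Arguments for signing an (already zipaligned) unsigned APK with apksigner."""
    return [
        "apksigner", "sign",
        "--ks", str(keystore),
        "--ks-key-alias", key_alias,
        "--ks-pass", f"env:{password_env}",  # read the password from an env var, not argv
        "--out", str(signed_apk),
        str(unsigned_apk),
    ]


def sign_apk(unsigned_apk: Path, keystore: Path, key_alias: str, signed_apk: Path) -> None:
    """Run apksigner; raises CalledProcessError if signing fails."""
    subprocess.run(apksigner_command(unsigned_apk, keystore, key_alias, signed_apk), check=True)
```

Running `apksigner verify` on the output afterwards is a cheap sanity check before uploading anything.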

The rest is pretty much the same between platforms:

The release service then uploads the signed release version of the app to Firebase (for Android) or App Store Connect (for iOS).

The service also notifies us in Slack that the RC is now available to be tested.

All the while, the release owner can carry on with their day, with no need to wait for the build to complete.
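For the final notification step, the simplest option is a Slack incoming webhook, which accepts a POST with a JSON body containing a `text` field. Whether Monzo’s service uses webhooks or the Slack Web API isn’t stated, so treat this as an illustrative sketch. The message wording, the webhook URL and the function names are hypothetical.

```python
import json
import urllib.request


def rc_ready_message(platform: str, version: str, rc_number: int, build_url: str) -> dict:
    """Minimal incoming-webhook payload announcing a new release candidate."""
    return {"text": f"{platform} {version} RC-{rc_number} is ready for smoke testing: {build_url}"}


def slack_webhook_request(webhook_url: str, message: dict) -> urllib.request.Request:
    """Build the POST that delivers `message` to a Slack incoming webhook."""
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(message).encode(),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
```

The webhook URL itself acts as a credential, so it belongs in the service’s secret store rather than in the Fastlane scripts.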

Another very important benefit of having a dedicated team work on this is documentation. We finally had time to write proper documentation of how the release scripts and services work, a cheatsheet for using them as a release owner, and even instructions on how to contribute to the process if needed.

Where we are now

We’re always looking to improve the process. Based on feedback from our regular retrospectives, we’ve recently introduced a few more improvements.

We decided to invest all of the time saved by the above changes back into the mobile engineering discipline. For the duration of their release week, release owners are not part of their squads. Instead, they focus on projects that don’t necessarily improve the product but improve the efficiency of the discipline as a whole: things like modularising the codebase, adopting a new database library, further improvements to the release automation, etc.

We automated a large part of our smoke tests. Currently, 50% of our smoke test suite is automated. This could be a topic for a whole different blog post though...

We introduced a nightly program. Staff members are asked to join an email list (we notify them via the Monzo app), and they then receive an updated build every day. This means we get feedback much faster: issues that we catch on the nightly build never make it to external users.

But we also have a few more unsolved issues:

We’re still struggling with what to put in our release notes. There often isn’t much to say in such frequent releases, and any notable features are usually hidden behind a feature flag and released several weeks after the mobile code has changed, so we can’t really talk about them.

We also want better measures of whether a release went well. The number of hotfixes, for example, is a good indicator, but ideally we’d like something more granular.

We still run into issues every now and then with things like the App Store and Play Store review processes, which are hard to predict and in which it’s hard to identify patterns.

We’re always looking for ways to improve our processes, and learning more as we go along. We are also looking for people with the same attitude. If you want to join us, check out our open roles.